Scrappy Parsing

I love parsing! I really do. There's something very satisfying about converting input into usable data structures that help me accomplish some task.

Parsing is important. How well we construct those data structures and the quality of the structures we choose can massively impact the work that comes after. We should probably all be worrying about parsing a little more than we do.

The good news is that Elixir is the best language I have ever worked with for doing serious parsing. Let's prove it. Let's pull the data out of a SQLite database file using vanilla Elixir and some tricks from my Scrappy Programmer Livebook series. (You don't need to have read that to follow along with this article. Everyone is welcome.)

Genie, Fetch Me Some Data

We need a database, with some data in it, in order to have something interesting to parse. Let's programmatically create one. This is the one time we will reach for some helper libraries. We'll use one to talk directly to SQLite and another to invent fake data. Here's the code:

Mix.install([

{:exqlite, "~> 0.23.0"},

{:faker, "~> 0.18.0"}

])

db_path = Path.join(System.tmp_dir!(), "db.sqlite3")

{:ok, conn} = Exqlite.Sqlite3.open(db_path)

:ok =

Exqlite.Sqlite3.execute(conn, """

CREATE TABLE people (

id INTEGER PRIMARY KEY,

name TEXT NOT NULL,

age INTEGER NOT NULL,

email TEXT NOT NULL

);

CREATE UNIQUE INDEX person_email ON people(email);

""")

{:ok, statement} =

Exqlite.Sqlite3.prepare(

conn,

"INSERT INTO people (name, age, email) VALUES (?1, ?2, ?3)"

)

Stream.repeatedly(fn ->

name = Faker.Person.name()

account =

name

|> String.downcase()

|> String.replace(~r{[^a-z]+}, "_")

|> String.replace(~r{_\z}, "")

[name, Enum.random(10..100//1), "#{account}@#{Faker.Internet.domain_name()}"]

end)

|> Stream.take(150)

|> Enum.each(fn values ->

:ok = Exqlite.Sqlite3.bind(conn, statement, values)

:done = Exqlite.Sqlite3.step(conn, statement)

end)

:ok = Exqlite.Sqlite3.release(conn, statement)

IO.puts("Database path: #{db_path}")Database path: /tmp/db.sqlite3 :ok

We're not really here to discuss this code, but, very briefly, it creates a new database table with four fields, adds on an index, and pumps 150 fake records into it. The only things in this code that effect what we are about to do are the fields in the table and index as well as the db_path variable. That holds the location of our database file in the temporary directory.

Let's now use the real SQLite to browse through what we've created:

sqlite> SELECT * FROM people LIMIT 10; 1|Margret Ebert|39|margret_ebert@bahringer.net 2|Thad Pouros|74|thad_pouros@rodriguez.com 3|Susana Torp|50|susana_torp@ebert.biz 4|Mr. Michele Stroman|84|mr_michele_stroman@white.name 5|Kenna Okuneva|49|kenna_okuneva@koch.biz 6|Irwin Kohler|61|irwin_kohler@ledner.com 7|Daren Keeling|89|daren_keeling@collins.name 8|Viola Beer V|73|viola_beer_v@bayer.org 9|Edward Cummerata|47|edward_cummerata@lockman.biz 10|Nathan Halvorson|98|nathan_halvorson@kuhlman.biz sqlite> SELECT * FROM people WHERE age >= 97; 10|Nathan Halvorson|98|nathan_halvorson@kuhlman.biz 27|Colten Kub DDS|98|colten_kub_dds@nolan.info 45|Randy Grimes|100|randy_grimes@grant.com 46|Mrs. Madisen Bashirian DVM|100|mrs_madisen_bashirian_dvm@prosacco.name 59|Paula Larkin|99|paula_larkin@abshire.com 63|Barney Walker|98|barney_walker@dooley.com 80|Daphney Carroll|100|daphney_carroll@jast.name 94|Rickey Balistreri DVM|97|rickey_balistreri_dvm@kuvalis.info 100|Bradford Schowalter|100|bradford_schowalter@okeefe.biz sqlite> SELECT * FROM people WHERE id = 77; 77|Samanta Waelchi III|88|samanta_waelchi_iii@blanda.org sqlite> SELECT * FROM people WHERE id = 142; 142|Darryl Dicki|62|darryl_dicki@sawayn.net sqlite> SELECT * FROM people WHERE email = 'randy_grimes@grant.com'; 45|Randy Grimes|100|randy_grimes@grant.com

Here we see five different queries that give us a feel for the data. The first two are table scans that require the database to read through the records to locate the ones that meet our criteria. There's no index on the age field to speed that up. The next two queries are very efficient lookups by primary key and the final query is almost as optimized since it uses the email index.

These five queries represent our goal. We want to recreate these operations using just Elixir. Let's to it!

I Hope Someone Brought A Map

An entire SQLite database is stored in just one file. That file contains the structural definitions for our table and index, all of the records we loaded into the table, the index that allows us to find records quickly by email, and a bit more. How do we find exactly what we're after on this lush, tropical island? We need a map!

SQLite has got you covered. The file is predictable. While it can contain multitudes, it is constructed from simple abstractions that can be easily understood one by one. As we work our way through those layers, a map will emerge showing us precisely where the treasure we seek can be found.

The first abstraction is pages. While a large database might produce a huge file, all SQLite files are divided into equally sized chunks. One chunk is called a page. Each page contains a certain kind of data and can be dealt with largely independently from the other pages.

This means that the first step to understanding is just knowing the size of each page. Once we know that size, we can carve up the entire file and begin to make sense of the individual pieces. Therefore, it may not be too surprising to learn that all SQLite databases begin with a header that explains a handful of key details, including the page size! Let's read that header:

File.open!(db_path, [:read, :binary], fn f ->

<<"SQLite format 3\0"::binary,

raw_page_size::integer-big-unit(8)-size(2),

1::integer-big-unit(8)-size(1),

1::integer-big-unit(8)-size(1),

0::integer-big-unit(8)-size(1),

64::integer-big-unit(8)-size(1),

32::integer-big-unit(8)-size(1),

32::integer-big-unit(8)-size(1),

file_change_counter::integer-big-unit(8)-size(4),

page_count::integer-big-unit(8)-size(4),

_first_freelist_trunk_page::integer-big-unit(8)-size(4),

_freelist_pages::integer-big-unit(8)-size(4),

_schema_cookie::integer-big-unit(8)-size(4),

4::integer-big-unit(8)-size(4),

_default_page_cache_size::integer-big-unit(8)-size(4),

_largest_root_page_for_vacuum::integer-big-unit(8)-size(4),

1::integer-big-unit(8)-size(4),

_user_version::integer-big-unit(8)-size(4),

0::integer-big-unit(8)-size(4),

_application_id::integer-big-unit(8)-size(4),

0::integer-big-unit(8)-size(20),

file_change_counter::integer-big-unit(8)-size(4),

3_046_000::integer-big-unit(8)-size(4)>> = IO.binread(f, 100)

page_size =

case raw_page_size do

1 -> 65_536

_ -> raw_page_size

end

%{page_size: page_size, page_count: page_count}

end)

%{page_count: 7, page_size: 4096}

The first 100 bytes of a SQLite database file are a collection of integers that explain the various features contained within. The excellent documentation explains what each of those values indicate. The most important one to us is the raw_page_size. If you look through the pattern match above, you will see some other interesting details like a page_count. That can be helpful to see the structure of the database, but you could also get it by dividing the file size by the page size.

Now, it may look like we are ignoring many of these values. To some extent that is true. This tutorial is not going to concern itself with free pages, for example, as we would only need to know about those if we were looking for empty pages to write new data into. However, don't make the mistake of assuming that the parser we are building is a fragile snowflake. Pattern matching has allowed me to hardcode many expectations about the header. In the places where you see me matching against literal integer values I am ensuring that the database has the formats, schema versions, and text encoding that I expect. The final value even encodes the exact version of SQLite used to create the file 3_046_000 ("3.46.0"). If you try to use this code on anything it wasn't prepared to handle, it will bail out noisily at this very first step. That makes it a lot easier to trust the results we are getting.

The rest of the code performs a trivial conversion to produce the actual page size and the output shows us that our file contains seven 4k pages.

The above pattern match was purposefully done in a very verbose format to specifically match the values given in the documentation. Many of those options are defaults though and others have much shorter forms. Let's rewrite it into what we are more likely to encounter in the wild and wrap it in a function for easy reuse:

parse_header = fn bytes, f ->

<<"SQLite format 3\0",

raw_page_size::2*8,

1::1*8,

1::1*8,

0::1*8,

64::1*8,

32::1*8,

32::1*8,

file_change_counter::4*8,

page_count::4*8,

_first_freelist_trunk_page::4*8,

_freelist_pages::4*8,

_schema_cookie::4*8,

4::4*8,

_default_page_cache_size::4*8,

_largest_root_page_for_vacuum::4*8,

1::4*8,

_user_version::4*8,

0::4*8,

_application_id::4*8,

0::20*8,

file_change_counter::4*8,

3_046_000::4*8>> = bytes

page_size =

case raw_page_size do

1 -> 65_536

_ -> raw_page_size

end

%{file: f, page_size: page_size, page_count: page_count}

end

open_db = fn path, func ->

File.open!(path, [:read, :binary], fn f ->

db =

f

|> IO.binread(100)

|> parse_header.(f)

func.(db)

end)

end

open_db.(db_path, &Function.identity/1)

%{file: #PID<0.184.0>, page_count: 7, page_size: 4096}

The code above also includes a new function that opens a file, parses the header, and passes the key database details into an anonymous function we can provide for further processing of the contents.

We've found the pages. Now we have to make sense of them.

Climb a Tree, Dear

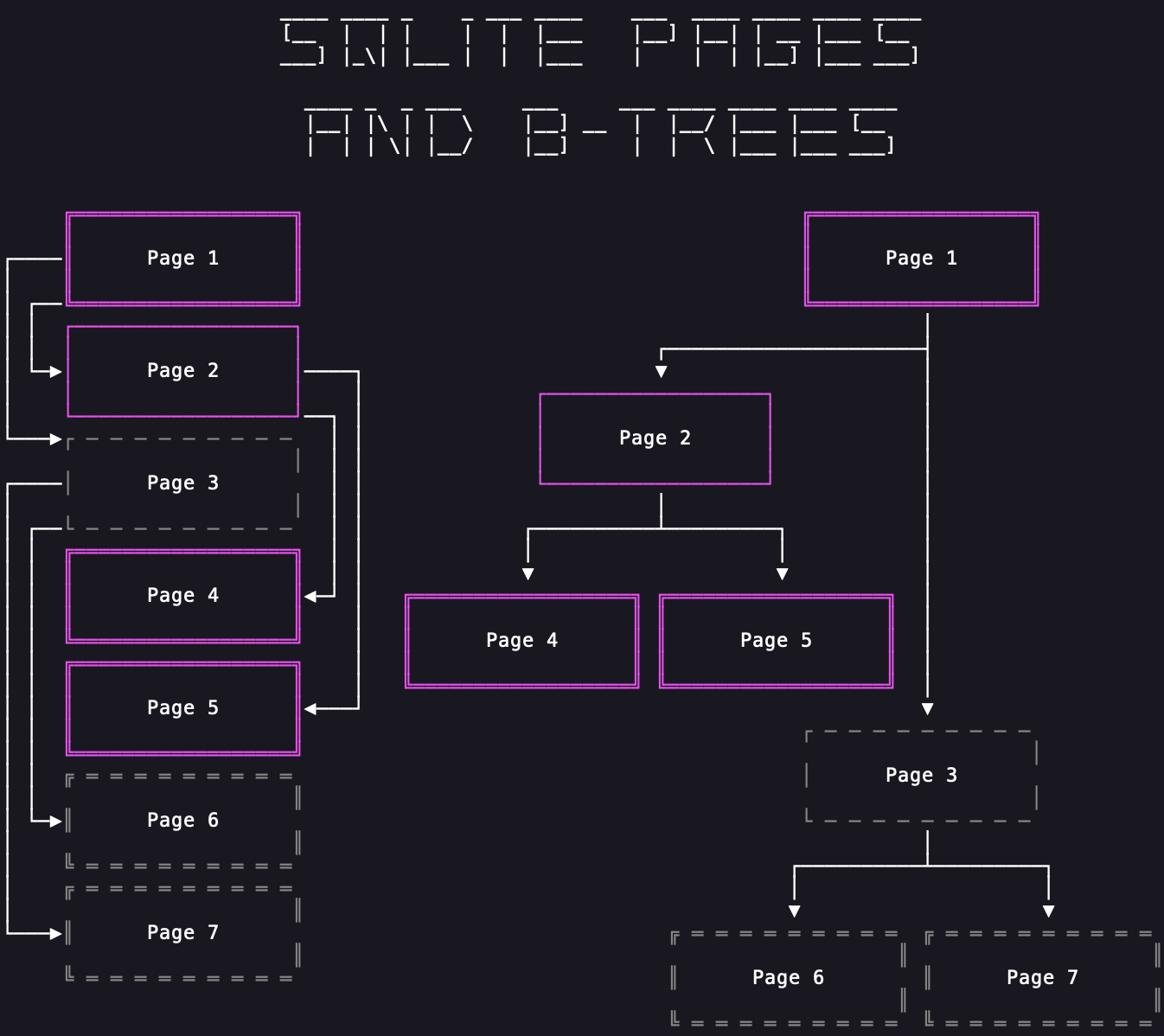

Somewhere in the seven pages of our database we know that we will find a table full of data and an index mapping email addresses to records in that table. Tables and indexes are stored in the second of SQLite's abstractions: B-trees. A B-tree is a handy tree format for efficiently storing large chunks of data (like pages) in a branching structure that can quickly get you to the data you seek. The tree itself is made of pages that point to other pages. If you know where the root page of a tree is, you can find all of the other pages.

Three of the seven pages are the tree that holds the data in our table. Another three are a tree that holds the index. The only other page, the first one in the database, holds an abstraction we'll look at a bit later that allows us to find both of those trees. The following image shows how these pages map to one another.

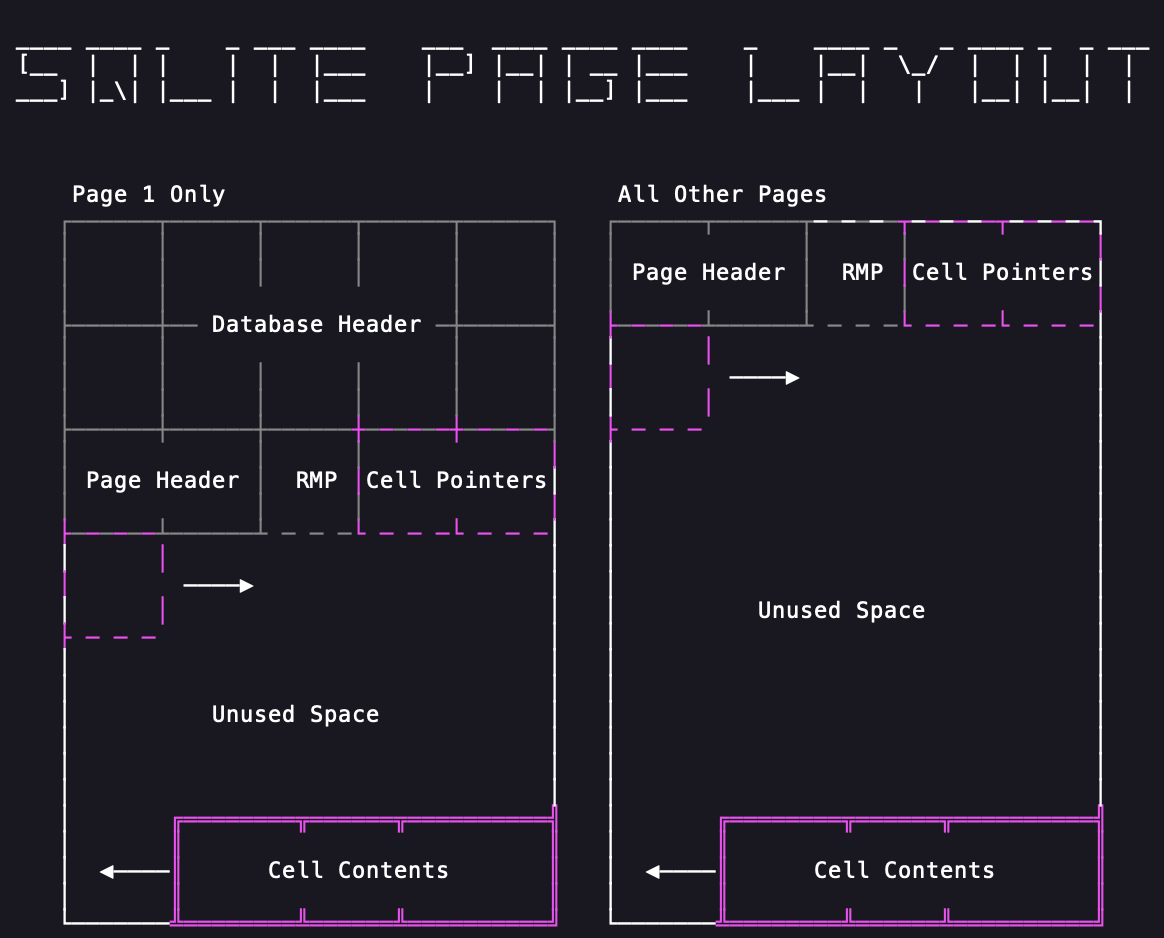

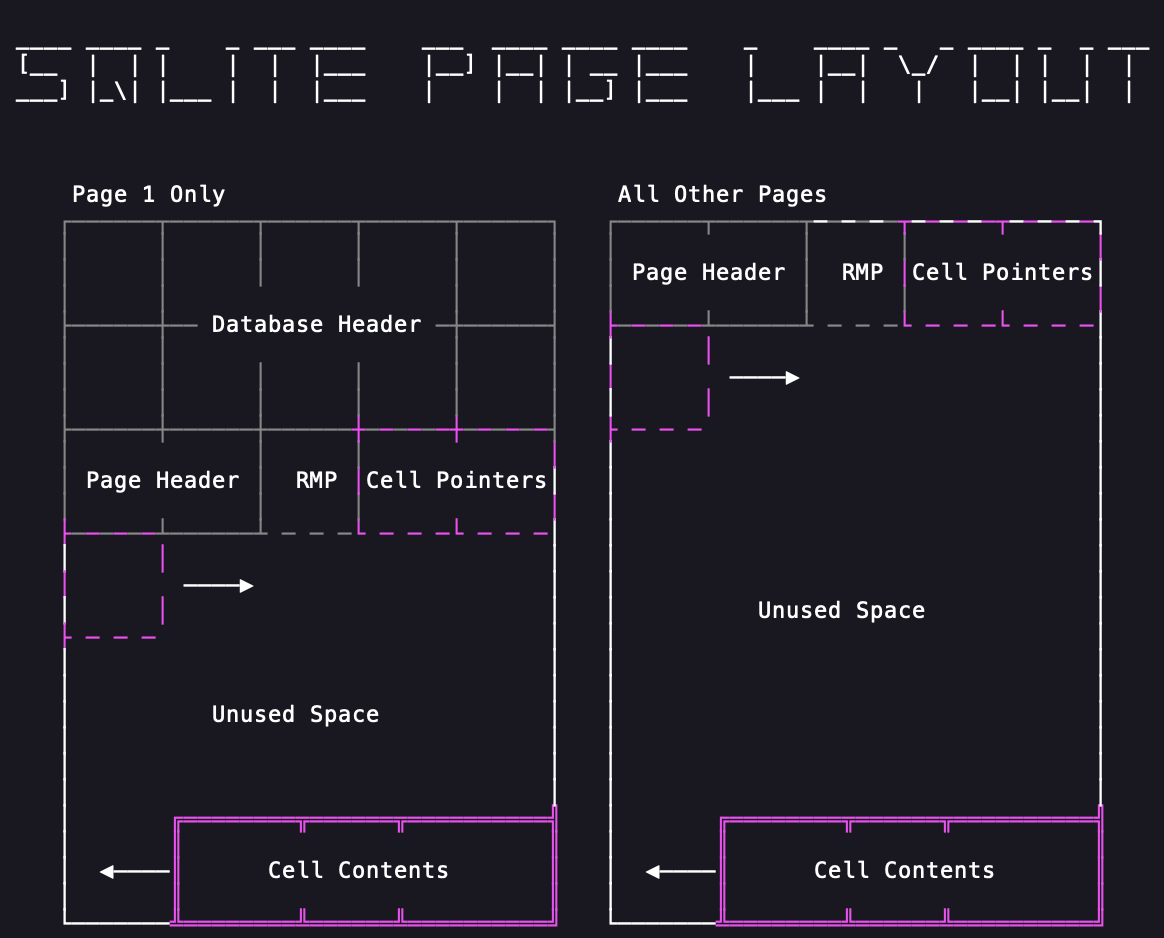

In order to follow the map of pages, we need to be able to read what is actually on them. Unsurprisingly, pages also have a predictable format including a header of their own that provides some key details about what you will find in its contents. The only gotcha when looking for this page header is to remember that the first 100 bytes of the database are the database header. That means that the header for page one starts 100 bytes in, but the header for all other pages is at the beginning of the page.

Again, we really only need a couple of key details from the page header to make sense of it. The first value we're interested in is the type of page that it is. Does it hold a table or an index? Is it the actual table data or does it just point to other pages that hold the table data?

We saw before that SQLite databases are just a collection of pages. Well, pages are just a collection of cells. That means the other key element we need to know are how many cells are on this page. That's in the header too. Let's parse them out:

parse_page = fn bytes, i ->

start = if i == 1, do: 100, else: 0

<<raw_type::1*8,

_first_page_freeblock::2*8,

cell_count::2*8,

_raw_cell_content_start::2*8,

_fragmented_free_bytes::1*8,

rest::binary>> = binary_slice(bytes, start, 12)

type =

case raw_type do

2 -> :interior_index

5 -> :interior_table

10 -> :leaf_index

13 -> :leaf_table

end

right_most_pointer =

if type in [:interior_index, :interior_table] do

<<right_most_pointer::4*8>> = rest

right_most_pointer

else

nil

end

%{

index: i,

start: start,

type: type,

cell_count: cell_count,

right_most_pointer: right_most_pointer

}

end

read_page = fn %{page_count: last_page} = db, i when i > 0 and i <= last_page ->

:file.position(db.file, (i - 1) * db.page_size)

db.file

|> IO.binread(db.page_size)

|> parse_page.(i)

end

open_db.(db_path, fn db ->

Enum.map(1..3//1, fn i ->

read_page.(db, i)

end)

end)

[

%{

index: 1,

start: 100,

type: :leaf_table,

cell_count: 2,

right_most_pointer: nil

},

%{

index: 2,

start: 0,

type: :interior_table,

cell_count: 1,

right_most_pointer: 5

},

%{

index: 3,

start: 0,

type: :interior_index,

cell_count: 1,

right_most_pointer: 7

}

]

This code is pretty similar to the earlier code that parsed the database header. We match some raw values, do some minor clean up, and return them in a data structure telling you what you need to know. You can see that I've pulled out some other details, like the right_most_pointer. We'll get to what that is in a bit.

Here we also see a new function for reading pages out of the file. Given the index of any page we want to see, some simple math can tell us how many bytes of other pages to skip past. We can then read one chunk of page size bytes to get that page.

The end of this example uses that header parser and that page reading function to extract some details about the first three pages. Don't worry too much about the first page just yet, but take note of 2 and 3. One of them is a table page that will eventually lead us to the other two table pages and the other is the index counterpart. To follow those leads, we will need to make sense of the cells.

Living Things Are Made Of Cells

Conceptually, the cells in a page are just a list of values. When I show you how those values are laid out it may initially seem strange, but it makes a lot more sense when you think about how databases can change. Let's look at a trivial example:

8whatever5small8whatever8whatever8whatever

Cells could just be laid out in order after the header. Each cell could begin with a number telling you how many bytes to read and be immediately followed by that content. The example above shows roughly what this would look like (although it is not in binary). Now consider that someone issues a SQL statement to modify the small field to be quiteabitbigger:

8whatever15quiteabitbigger8whatever8whatever8whatever

In order to accommodate this change most of the page has to be moved. All later values had to be shifted in order to make space for the now larger field. SQLite wants to minimize the need for changes due to edits.

To support that, it stores two things for each cell. The first is a pointer to where the cell content is in the page and then, at that location, it stores the actual content of the cell. When changes modify cells, SQLite can place the new content in any convenient space and simply adjust the pointer numbers to direct us to the right things. Have a fresh look at this earlier diagram:

The cell pointers are those simple numbers following immediately after the header and growing towards the end of the page. The cell contents themselves will start at the end of the page and grow backwards toward the beginning. This leaves a large chunk of empty space in the middle for use when edits cause changes. Here's an animation of how SQLite will layout the first three cells as they are added:

Luckily, understanding cell layout is harder than actually parsing the cells. We just need to read some numbers after the header and follow those to extract the contents.

This does require another new element of the SQLite format called a varint. You'll see the code to parse these special numbers in the following example, but I'm not going to explain them just yet. I promise that we will get there soon. In the meantime, let's read some cell contents:

parse_varint = fn bytes, start ->

Enum.reduce_while(0..8, {0, 0}, fn offset, {int, size} ->

<<high_bit::1, new_int::7>> = binary_part(bytes, start + offset, 1)

cond do

size == 8 -> {:halt, {Bitwise.bsl(int, 8) + new_int, size + 1}}

high_bit == 0 -> {:halt, {Bitwise.bsl(int, 7) + new_int, size + 1}}

true -> {:cont, {Bitwise.bsl(int, 7) + new_int, size + 1}}

end

end)

end

parse_cells = fn bytes, page ->

cell_start = page.start + if is_nil(page.right_most_pointer), do: 8, else: 12

cells =

0..(page.cell_count - 1)//1

|> Enum.map(fn i ->

<<content_start::2*8>> = binary_part(bytes, i * 2 + cell_start, 2)

content_start

end)

|> Enum.map(fn content_start ->

case page.type do

:interior_index ->

<<left_child_pointer::4*8>> = binary_part(bytes, content_start, 4)

{payload_bytes, p_size} = parse_varint.(bytes, content_start + 4)

{

left_child_pointer,

binary_part(bytes, content_start + 4 + p_size, payload_bytes)

}

:interior_table ->

<<left_child_pointer::4*8>> = binary_part(bytes, content_start, 4)

{integer_key, _size} = parse_varint.(bytes, content_start + 4)

{left_child_pointer, integer_key}

:leaf_index ->

{payload_bytes, p_size} = parse_varint.(bytes, content_start)

binary_part(bytes, content_start + p_size, payload_bytes)

:leaf_table ->

{payload_bytes, p_size} = parse_varint.(bytes, content_start)

{rowid, i_size} = parse_varint.(bytes, content_start + p_size)

{

rowid,

binary_part(bytes, content_start + p_size + i_size, payload_bytes)

}

end

end)

Map.put(page, :cells, cells)

end

read_page = fn %{page_count: last_page} = db, i when i > 0 and i <= last_page ->

:file.position(db.file, (i - 1) * db.page_size)

bytes = IO.binread(db.file, db.page_size)

page = parse_page.(bytes, i)

parse_cells.(bytes, page)

end

open_db.(db_path, fn db -> read_page.(db, 1) end)

%{

index: 1,

start: 100,

type: :leaf_table,

cell_count: 2,

right_most_pointer: nil,

cells: [

{1,

<<7, 23, 25, 25, 1, 129, 119, 116, 97, 98, 108, 101, 112, 101, 111, 112,

108, 101, 112, 101, 111, 112, 108, 101, 2, 67, 82, 69, 65, 84, 69, 32,

84, 65, 66, 76, 69, 32, 112, 101, 111, ...>>},

{2,

<<6, 23, 37, 25, 1, 111, 105, 110, 100, 101, 120, 112, 101, 114, 115, 111,

110, 95, 101, 109, 97, 105, 108, 112, 101, 111, 112, 108, 101, 3, 67, 82,

69, 65, 84, 69, 32, 85, 78, 73, ...>>}

]

}

That's a lot of code, so let's break it down. Again, for now, just think of the code that parses varints as a magical number reader. Inside the cell parsing code, we first check if this is a kind of page that would include a right_most_pointer. When that's present, it pushes the start of the cells a little further into the page.

Immediately after that check is where the action is. That first call to Enum.map/2 is what reads all the cell pointers, converting them into simple integers that show where the content for that cell starts. The following call to Enum.map/2 is what actually reads those contents. Unfortunately, cell contents are slightly different for the four different types of pages in our database. That's why this section branches on the page type and uses four different techniques.

This code redefines read_page/2 to add in cell parsing whenever we fetch one.

We will talk about what those cells actually hold in a moment, but you can already see from the output that we have managed to extract some kind of structured data pairs of numbered binary blobs. Onward to deblobification!

Integers of Varying Sizes

Many things in binary formats involve telling you what you are about to read, and potentially the size of it, then giving you the thing to read. The way to tell you what to read is to put some magic number in front of it. That number can probably be pretty small. If there are less than 256 things you could need to read, one byte will do. Of course, let's say you are told to read some arbitrarily long text that the user typed in. Now we need another number to tell you how long the text is. How large does that size indicator need to be? Well, it would have to be large enough to encode the maximally large text that a user could enter. But if we throw these large numbers around everywhere and users only enter ten digits at a time, we're wasting some serious space.

Varints are the cure for this disease. They are a way to encode an integer of varying lengths. If you only need a small number, they take up one byte. They scale all the way up to nine bytes for the largest values that SQLite supports. To read one, you keep going until you read a byte where the highest-order bit is a 0 or you reach the ninth byte. That sounds more complex than it is. Here are all of the possible combinations:

0bbbbbbb 1bbbbbbb 0bbbbbbb 1bbbbbbb 1bbbbbbb 0bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 0bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 0bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 0bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 0bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 0bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb 1bbbbbbb bbbbbbbb

The 1 or 0 at the beginning of each byte tells you whether to keep reading or stop respectively. You don't need it for the bottom case because you always stop if you read nine bytes. The b bits are what you actually combine to form the numbers.

This system makes it easy for SQLite to encode values using only the number of bytes needed, without a lot of extra padding. And why does that matter? Records, my good friend. Records.

Building a Record Player

Databases are made up of pages, organized into B-trees. Pages are made up of cells. Cells are made up of records. Are we having fun yet?

That binary blob we saw when we parsed out the cell values is a record. A record is a header indicating the types and sizes of all the fields that follow, immediately followed by the contents of those fields. As the table at the link shows, a type 1 means you are reading a single byte integer for that field while a 6 is an eight byte integer. Odd values above 13 are a way to indicate textual content and a size with one number. Be sure to look at the table. It's clever!

The following code is an Elixirification of that table:

parse_record = fn bytes ->

{header_bytes, h_size} = parse_varint.(bytes, 0)

h_size

|> Stream.unfold(fn read_bytes ->

if read_bytes < header_bytes do

{column, c_size} = parse_varint.(bytes, read_bytes)

{column, read_bytes + c_size}

else

nil

end

end)

|> Enum.to_list()

|> Enum.map_reduce(header_bytes, fn

0, read_bytes ->

{nil, read_bytes}

1, read_bytes ->

<<int::1*8>> = binary_part(bytes, read_bytes, 1)

{int, read_bytes + 1}

2, read_bytes ->

<<int::2*8>> = binary_part(bytes, read_bytes, 2)

{int, read_bytes + 2}

3, read_bytes ->

<<int::3*8>> = binary_part(bytes, read_bytes, 3)

{int, read_bytes + 3}

4, read_bytes ->

<<int::4*8>> = binary_part(bytes, read_bytes, 4)

{int, read_bytes + 4}

5, read_bytes ->

<<int::6*8>> = binary_part(bytes, read_bytes, 6)

{int, read_bytes + 6}

6, read_bytes ->

<<int::8*8>> = binary_part(bytes, read_bytes, 8)

{int, read_bytes + 8}

7, read_bytes ->

<<flt::8*8>> = binary_part(bytes, read_bytes, 8)

{flt, read_bytes + 8}

8, read_bytes ->

{0, read_bytes}

9, read_bytes ->

{1, read_bytes}

n, read_bytes when n >= 12 and rem(n, 2) == 0 ->

size = div(n - 12, 2)

text = binary_part(bytes, read_bytes, size)

{text, read_bytes + size}

n, read_bytes when n >= 13 and rem(n, 2) == 1 ->

size = div(n - 13, 2)

text = binary_part(bytes, read_bytes, size)

{text, read_bytes + size}

end)

|> elem(0)

end

read_page = fn %{page_count: last_page} = db, i when i > 0 and i <= last_page ->

:file.position(db.file, (i - 1) * db.page_size)

bytes = IO.binread(db.file, db.page_size)

page = parse_page.(bytes, i)

page = parse_cells.(bytes, page)

Map.update!(page, :cells, fn cells ->

Enum.map(cells, fn

bytes when is_binary(bytes) -> parse_record.(bytes)

{other, bytes} when is_binary(bytes) -> {other, parse_record.(bytes)}

cell -> cell

end)

end)

end

open_db.(db_path, fn db -> read_page.(db, 1) end)

%{

index: 1,

start: 100,

type: :leaf_table,

cell_count: 2,

right_most_pointer: nil,

cells: [

{1,

["table", "people", "people", 2,

"CREATE TABLE people (\n id INTEGER PRIMARY KEY,\n name TEXT NOT NULL,\n age INTEGER NOT NULL,\n email TEXT NOT NULL\n)"]},

{2,

["index", "person_email", "people", 3,

"CREATE UNIQUE INDEX person_email ON people(email)"]}

]

}

The function heads inside of the Enum.map_reduce/3 call line up perfectly with the table from the documentation. This code also redefines read_page/2 yet again to add in the new cell decoding functionality.

As you can see from the output, cell blob content has turned into meaningful data. Page one of a SQLite database is another B-tree. It just so happens that ours is small enough for the whole thing to fit on one page without any redirecting pages needed. That initial B-tree points to a SQL table describing all of the SQL objects encoded in this database file. It includes the page each item starts on among other details. This finally shows how I knew that our table began on page two and our index was on page three.

Lookup Tables

Now that we understand the first page, let's look at a SQL table that spans multiple pages:

open_db.(db_path, fn db -> read_page.(db, 2) end)

%{

index: 2,

start: 0,

type: :interior_table,

cell_count: 1,

right_most_pointer: 5,

cells: [{4, 79}]

}

We know from earlier diagrams in this post that the content of our database table begins on page two and continues on pages four and five. If you look closely at the data structure above for page two, you will see that it does point to 4 and 5.

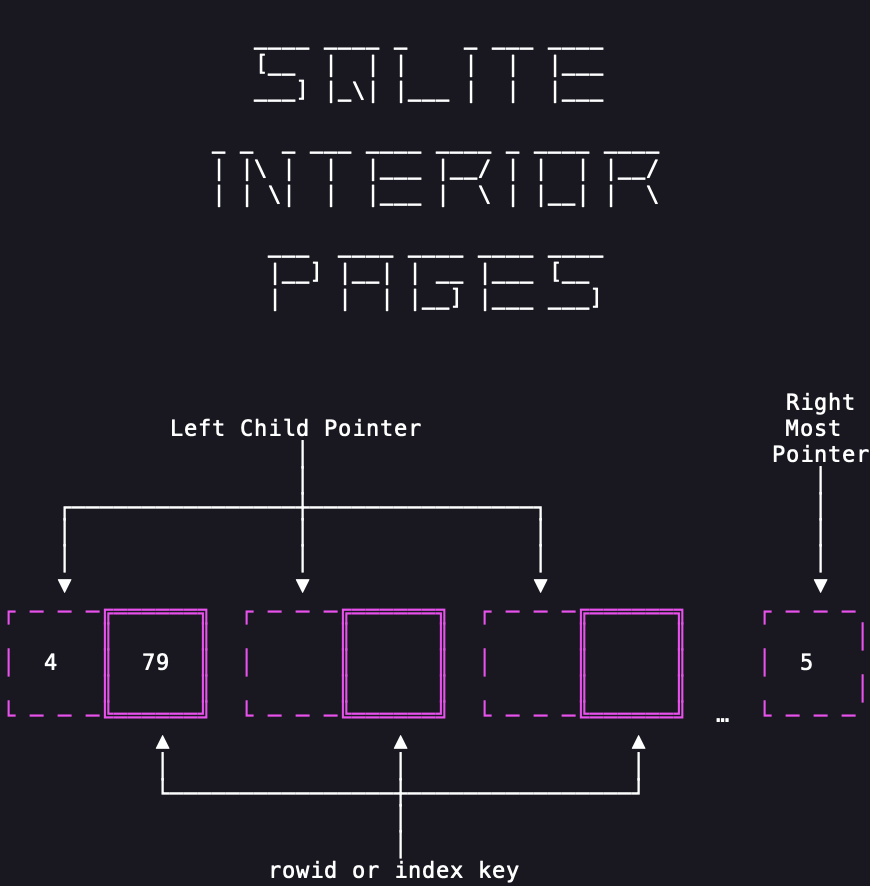

Cells of interior pages, the pages that lead to other pages, are pairs of page numbers (called a left child pointer) and keys. For the table above, there is only one cell and it contains the page number 4 paired with the key 79. These pairs form a conceptual mapping where the page number preceding each key is the page where you will find all records with a rowid, an autoincrementing integer key, less than or equal to that key. When none of those cells contains the key you are looking for, you follow the right_most_pointer to the last page in the list. Here's a diagram of how the lookup cells relate to each other:

Indexes have a very similar structure for their pages that point to other pages. Let's look at our index to see this:

open_db.(db_path, fn db -> read_page.(db, 3) end)

%{

index: 3,

start: 0,

type: :interior_index,

cell_count: 1,

right_most_pointer: 7,

cells: [{6, ["micah_beahan@douglas.biz", 18]}]

}

The above is still a pair of page number and "key." However, the key is a little more complex. It now contains all of the fields we are indexing on (just email in our case) followed by the rowid of the matching record in the actual table.

This is used in pretty much the same way. Emails less than (speaking in a manner of textual sorting) would be on the page number on the left, while greater emails would be on the right_most_pointer page. In indexes, the record that exactly matches the key would not appear on a deeper page, because finding it during a lookup means you already know what you need to know: the rowid to lookup in the actual table.

The rest of the index is pretty unsurprising. It just contains that email and rowid pairs. Here's a look at one of those pages:

open_db.(db_path, fn db -> read_page.(db, 6) end)

%{

index: 6,

start: 0,

type: :leaf_index,

cell_count: 81,

right_most_pointer: nil,

cells: [

["ada_bauch@bechtelar.name", 144],

["albin_weber@wyman.com", 113],

["alexandra_morissette@mayer.info", 48],

["alfonzo_gusikowski@osinski.org", 133],

["ali_ortiz@fahey.info", 97],

["anibal_hudson@streich.biz", 136],

["ansel_kulas@leffler.biz", 150],

["ara_glover@zboncak.com", 62],

["arnoldo_hodkiewicz@thiel.info", 86],

["ashleigh_dietrich@okon.biz", 74],

["autumn_halvorson@conroy.biz", 106],

["barney_walker@dooley.com", 63],

["beth_fay@stiedemann.biz", 57],

["bette_gottlieb@harber.info", 129],

["blaise_sporer@bins.info", 56],

["blake_kulas@wiza.org", 126],

["bradford_schowalter@okeefe.biz", 100],

["breana_bergnaum@von.com", 107],

["cesar_schmidt@fahey.org", 75],

["chelsey_waters@stanton.net", 82],

["colten_kub_dds@nolan.info", 27],

["dakota_d_amore@heidenreich.biz", 120],

["dale_gorczany@jerde.com", 72],

["daphney_carroll@jast.name", 80],

["daren_keeling@collins.name", 7],

["dario_brown@dibbert.net", 36],

["darryl_dicki@sawayn.net", 142],

["dayne_goodwin@gusikowski.net", 134],

["deshaun_gutkowski@sipes.biz", 13],

["donna_wuckert@mills.name", 31],

["dr_lee_bruen_ii@bogisich.net", 22],

["dr_taylor_frami@roberts.biz", 71],

["dr_vidal_cartwright_i@cummerata.net", 127],

["ed_goyette@wuckert.net", 76],

["edward_cummerata@lockman.biz", 9],

["edyth_medhurst@hauck.net", 146],

["elouise_borer@ruecker.biz", 44],

["erick_strosin@schneider.info", 95],

["esteban_stracke@batz.biz", 110],

["etha_mohr@kerluke.net", 102],

["frances_grant_ii@vandervort.name", 24],

["gage_schaefer@waters.name", 105],

["gardner_fritsch_dvm@nicolas.info", ...],

[...],

...

]

}

Query Time

We now understand all of the abstractions needed to make sense of this database. Let's reproduce the five queries at the beginning of this article using Elixir. The first thing that we need is a function that can walk through an entire B-tree yielding the contents as they are passed:

stream_table = fn db, root_page ->

Stream.resource(

fn -> [{read_page.(db, root_page), 0}] end,

fn

[] ->

{:halt, []}

[{%{type: :leaf_table} = page, 0} | rest] ->

{page.cells, rest}

[{%{cell_count: cell_count} = page, cell_count} | rest] ->

{[], [{read_page.(db, page.right_most_pointer), 0} | rest]}

[{page, i} | rest] ->

{next_page, _rowid} = Enum.at(page.cells, i)

{[], [{read_page.(db, next_page), 0}, {page, i + 1} | rest]}

end,

fn _stack -> :noop end

)

end

open_db.(db_path, fn db -> db |> stream_table.(1) |> Enum.to_list() end)

[

{1,

["table", "people", "people", 2,

"CREATE TABLE people (\n id INTEGER PRIMARY KEY,\n name TEXT NOT NULL,\n age INTEGER NOT NULL,\n email TEXT NOT NULL\n)"]},

{2,

["index", "person_email", "people", 3,

"CREATE UNIQUE INDEX person_email ON people(email)"]}

]

I've decided to represent table B-trees as Elixir Streams. This allows us to use the full suite of iterators to dig through them as needed.

The second thing we need is a way to link field definitions with the values we find in the tables. SQLite handles this by giving you the SQL used to create the table in the database schema listing. Let's add some code to parse those out when the database is opened:

parse_schema = fn db ->

sqlite_schema = [

type: "TEXT",

name: "TEXT",

tbl_name: "TEXT",

rootpage: "INTEGER",

sql: "TEXT"

]

schema =

db

|> stream_table.(1)

|> Enum.into(%{"sqlite_schema" => {1, sqlite_schema}}, fn {_rowid, values} ->

row = Enum.zip(Keyword.keys(sqlite_schema), values)

fields =

case Keyword.fetch!(row, :type) do

"table" ->

~r{(\w+)\s+([^,\(\)]+?)\s*[,\)]}

|> Regex.scan(Keyword.fetch!(row, :sql))

|> Enum.map(fn [_match, name, definition] ->

{String.to_atom(name), definition}

end)

"index" ->

~r{(\w+)\s*[,\)]}

|> Regex.scan(Keyword.fetch!(row, :sql))

|> Enum.map(fn [_match, name] -> String.to_atom(name) end)

_other ->

[]

end

key =

if Keyword.fetch!(row, :type) == "table" do

Keyword.fetch!(row, :name)

else

"#{Keyword.fetch!(row, :tbl_name)}:#{Keyword.fetch!(row, :name)}"

end

{key, {Keyword.fetch!(row, :rootpage), fields}}

end)

Map.put(db, :schema, schema)

end

open_db = fn path, func ->

File.open!(path, [:read, :binary], fn f ->

db =

f

|> IO.binread(100)

|> parse_header.(f)

db

|> parse_schema.()

|> func.()

end)

end

open_db.(db_path, fn db -> db end)

%{

file: #PID<0.192.0>,

page_count: 7,

page_size: 4096,

schema: %{

"people" => {2,

[

id: "INTEGER PRIMARY KEY",

name: "TEXT NOT NULL",

age: "INTEGER NOT NULL",

email: "TEXT NOT NULL"

]},

"people:person_email" => {3, [:email]},

"sqlite_schema" => {1,

[

type: "TEXT",

name: "TEXT",

tbl_name: "TEXT",

rootpage: "INTEGER",

sql: "TEXT"

]}

}

}

Don't loose a lot of sleep over this crude parsing code. I used regular expressions to pull out the values we need to care about for these examples. Obviously, SQLite has a SQL parser that it can leverage to understand these definitions. That's outside the scope of what we are trying to do here.

The above code redefines open_db/2 to always perform this schema parsing and add the yielded field definitions along with the root page numbers to the database data structure. We can combine those definitions with the table records to produce a full table scan:

build_table_row = fn fields, {rowid, values} ->

Enum.zip_with(fields, values, fn {name, definition}, v ->

v =

if is_nil(v) and definition == "INTEGER PRIMARY KEY" do

rowid

else

v

end

{name, v}

end)

end

scan_table = fn db, table_name ->

{root_page, fields} = Map.fetch!(db.schema, table_name)

db

|> stream_table.(root_page)

|> Stream.map(fn id_and_values -> build_table_row.(fields, id_and_values) end)

end

open_db.(db_path, fn db ->

db

|> scan_table.("people")

|> Enum.take(10)

end)

[

[id: 1, name: "Margret Ebert", age: 39, email: "margret_ebert@bahringer.net"],

[id: 2, name: "Thad Pouros", age: 74, email: "thad_pouros@rodriguez.com"],

[id: 3, name: "Susana Torp", age: 50, email: "susana_torp@ebert.biz"],

[

id: 4,

name: "Mr. Michele Stroman",

age: 84,

email: "mr_michele_stroman@white.name"

],

[id: 5, name: "Kenna Okuneva", age: 49, email: "kenna_okuneva@koch.biz"],

[id: 6, name: "Irwin Kohler", age: 61, email: "irwin_kohler@ledner.com"],

[id: 7, name: "Daren Keeling", age: 89, email: "daren_keeling@collins.name"],

[id: 8, name: "Viola Beer V", age: 73, email: "viola_beer_v@bayer.org"],

[

id: 9,

name: "Edward Cummerata",

age: 47,

email: "edward_cummerata@lockman.biz"

],

[

id: 10,

name: "Nathan Halvorson",

age: 98,

email: "nathan_halvorson@kuhlman.biz"

]

]

This is the first query we ran back at the beginning of this article. It's worth noting that, now that we have all the needed abstractions, the final call is essentially Enum.take(table, 10). Scanning for specific ages isn't much harder:

open_db.(db_path, fn db ->

db

|> scan_table.("people")

|> Enum.filter(fn row -> Keyword.fetch!(row, :age) >= 97 end)

end)

[

[

id: 10,

name: "Nathan Halvorson",

age: 98,

email: "nathan_halvorson@kuhlman.biz"

],

[id: 27, name: "Colten Kub DDS", age: 98, email: "colten_kub_dds@nolan.info"],

[id: 45, name: "Randy Grimes", age: 100, email: "randy_grimes@grant.com"],

[

id: 46,

name: "Mrs. Madisen Bashirian DVM",

age: 100,

email: "mrs_madisen_bashirian_dvm@prosacco.name"

],

[id: 59, name: "Paula Larkin", age: 99, email: "paula_larkin@abshire.com"],

[id: 63, name: "Barney Walker", age: 98, email: "barney_walker@dooley.com"],

[

id: 80,

name: "Daphney Carroll",

age: 100,

email: "daphney_carroll@jast.name"

],

[

id: 94,

name: "Rickey Balistreri DVM",

age: 97,

email: "rickey_balistreri_dvm@kuvalis.info"

],

[

id: 100,

name: "Bradford Schowalter",

age: 100,

email: "bradford_schowalter@okeefe.biz"

]

]

Maximum Warp, Engage

The three remaining queries are meant to be more efficient than a table scan. They can skip right to the needed data using primary keys or supporting indexes. As we've seen, the way SQLite tables are stored, they are basically their own index for autoincrementing integer primary keys. Let's write the code to walk those B-trees efficiently:

lookup_by_id = fn db, id ->

{root_page, fields} = Map.fetch!(db.schema, "people")

Stream.iterate(read_page.(db, root_page), fn

%{type: :leaf_table} = page ->

with id_and_values when is_tuple(id_and_values) <-

Enum.find(page.cells, fn {rowid, _values} -> rowid == id end) do

build_table_row.(fields, id_and_values)

end

page ->

next_page =

page.cells

|> Enum.find({page.right_most_pointer, nil}, fn {_pointer, rowid} ->

id <= rowid

end)

|> elem(0)

read_page.(db, next_page)

end)

|> Enum.find(fn found -> not is_map(found) end)

end

open_db.(db_path, fn db -> lookup_by_id.(db, 77) end)[ id: 77, name: "Samanta Waelchi III", age: 88, email: "samanta_waelchi_iii@blanda.org" ]

This code still streams the content, but it's smarter about how it moves from page to page. Since we pass in an id, it can check the keys to see which branches of the tree to follow, skipping any pages that won't lead to what we're after. When it reaches a leaf page, it knows the desired value must be on this page (assuming it exists) and a simple Enum.find/2 is used to retrieve it.

We know this first lookup was on page 4, because the id was less than 79. Let's do one more lookup to force it to take the other path to page 5:

open_db.(db_path, fn db -> lookup_by_id.(db, 142) end)[id: 142, name: "Darryl Dicki", age: 62, email: "darryl_dicki@sawayn.net"]

This final query uses a very similar strategy, but for the email index. The differences are:

- We are checking emails instead of ID's

- Exactly matching the key on an interior page ends the search without continuing to a leaf page

- When the email lookup succeeds, we use the resulting ID to perform a table lookup and get the actual record

Here's the code:

lookup_by_email = fn db, email ->

{root_page, _fields} = Map.fetch!(db.schema, "people:person_email")

key =

Stream.iterate(read_page.(db, root_page), fn

%{type: :leaf_index} = page ->

Enum.find(page.cells, fn [e, _rowid] -> email == e end)

page ->

match =

Enum.find(

page.cells,

{page.right_most_pointer, nil},

fn {_pointer, [e, _rowid]} -> email <= e end

)

case match do

{_pointer, [e, rowid]} when email == e -> [email, rowid]

{pointer, _key} -> read_page.(db, pointer)

end

end)

|> Enum.find(fn found -> not is_map(found) end)

case key do

[_email, rowid] -> lookup_by_id.(db, rowid)

failed_match -> failed_match

end

end

open_db.(db_path, fn db ->

lookup_by_email.(db, "randy_grimes@grant.com")

end)[id: 45, name: "Randy Grimes", age: 100, email: "randy_grimes@grant.com"]

Go Forth and Parse Things

The code above doesn't handle every possible situation in a SQLite database. The most notable omission is that it's possible for large content to overflow a page and end up on subsequent pages. A real solution needs to identify those cases and follow the leads.

However, we've handled a huge chunk of the SQLite file specification, securely and efficiently. We used some random file access, streaming content, and some seriously powerful binary pattern matching to make sense of a lot of data. These simple tools are very powerful ways to break down the content we are faced with.

If you enjoyed this post, I compare the parsing strategies used here with other techniques, like lexer and parser generators, in The Wild World of Parsing Livebook from my How to Train Your Scrappy Programmer series.

Discussion of this article is welcome on Elixir Forum.